Overview

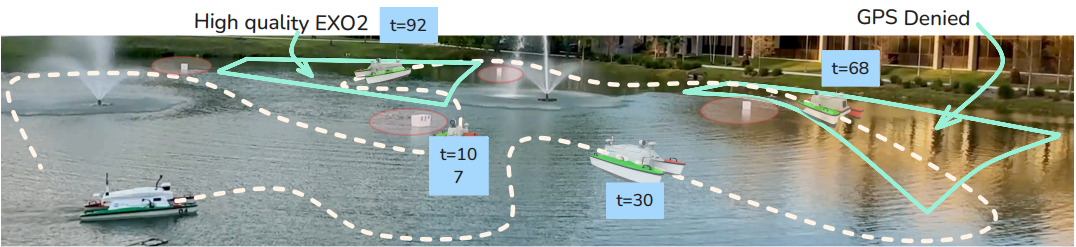

Robots equipped with rich sensor suites can localize reliably in partially observable environments, but powering every sensor continuously is wasteful and often infeasible. Belief-space planners address this by propagating pose-belief covariance through analytic models and switching sensors heuristically, an approach that is brittle and expensive at runtime. Data-driven approaches, including diffusion models, learn multi-modal trajectories from demonstrations but presuppose an accurate, always-on state estimate.

Research Goals

- Choose a minimal subset of sensors

- Create semantic maps for any available sensor

- Generate a sensor-agnostic map representation

- Localize and navigate with minimal sensing capabilities

Related Publications

- Puthumanaillam, G., Penumarti, A., Vora, M., Padrao, P., Fuentes, J., Bobadilla, L., Shin, J., & Ornik, M., “Belief-Conditioned One-Step Diffusion: Real-Time Trajectory Planning with Just-Enough Sensing,” accepted to the 9th Conference on Robot Learning (CoRL) as an oral presentation.

Research Team

UF Team: Aditya Penumarti, Jane Shin

External Collaborators: Gokul Puthumanaillam, Manav Vora, Paulo Padrao, Jose Fuentes, Leonardo Bobadilla, Melkior Ornik

Acknowledgement

This work is conducted in collaboration with the University of Illinois Urbana-Champaign, Florida International University, and Providence University.